In England, domestic violence accounts for one-third of all assaults involving injury. A crucial part of tackling this abuse is risk assessment – determining what level of danger someone may be in so that they can receive the appropriate help as quickly as possible. It also helps the police prioritise their response to domestic abuse calls at a time when resources are severely constrained. In this research, we asked how existing risk assessment can be improved, a question that arose from discussions with policy makers who pointed to the lack of systematic evidence on the topic.

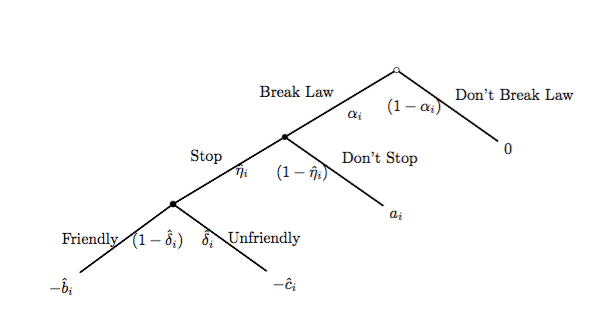

Currently, the risk assessment is done through a standardised list of questions – the so-called DASH form (Domestic Abuse, Stalking and Harassment and Honour-Based Violence) – which consists of 27 questions that are used to categorise a case as standard, medium or high risk. The resulting DASH risk scores have limited power in predicting which cases will result in violence in the future. Following this research, we suggest that a two-part procedure would do better both in prioritising calls for service and in providing protective resources to victims with the greatest need.

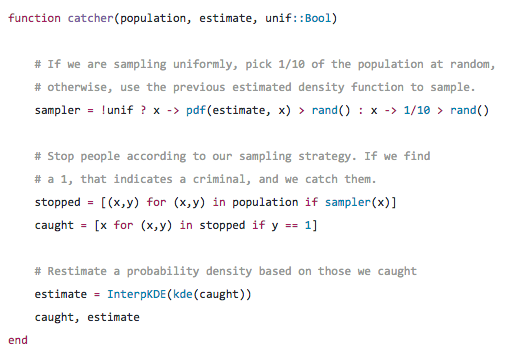

In our predictive models, we use individual-level records on domestic abuse calls, crimes, victim and perpetrator data from the Greater Manchester Police to construct the criminal and domestic abuse history variables of the victim and perpetrator. We combine this with DASH questionnaire data in order to forecast reported violent recidivism for victim-perpetrator pairs. Our predictive models are random forests, which are a machine-learning method consisting of a large number of classification trees that individually classify each observation as a predicted failure or non-failure. Importantly, we take the different costs of misclassification into account. Predicting no recidivism when it actually happens (a false negative) is far worse in terms of social costs than predicting recidivism when it does not happen (a false positive). While we set the cost of incurring a false negative versus a false positive at 10:1, this is a parameter that can be adjusted by stakeholders.
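
To make the role of the 10:1 cost ratio concrete, here is a minimal sketch of one common way to build asymmetric costs into a forest's decision rule: lowering the share of tree votes needed to flag a case. It is an illustration under stated assumptions, not necessarily the procedure used in the underlying article; the function names (`vote_threshold`, `flag_case`) and the vote-threshold approach itself are hypothetical.

```python
def vote_threshold(cost_fn: float, cost_fp: float) -> float:
    """Share of 'failure' votes above which flagging a case has lower expected cost.

    If p is the share of trees voting 'failure', flagging costs (1 - p) * cost_fp
    in expectation and not flagging costs p * cost_fn, so we flag when
    p > cost_fp / (cost_fp + cost_fn).
    """
    return cost_fp / (cost_fp + cost_fn)


def flag_case(tree_votes, cost_fn: float = 10.0, cost_fp: float = 1.0) -> bool:
    """Classify one case from per-tree votes (1 = predicted failure, 0 = non-failure).

    The 10:1 default mirrors the ratio quoted in the text; it is an adjustable
    parameter, not a fixed property of the method.
    """
    if not tree_votes:
        raise ValueError("need at least one tree vote")
    share = sum(tree_votes) / len(tree_votes)
    return share > vote_threshold(cost_fn, cost_fp)


# Toy example (made-up votes): only 1 tree in 10 predicts recidivism.
votes = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
print(flag_case(votes))                            # flagged under 10:1 costs
print(flag_case(votes, cost_fn=1.0, cost_fp=1.0))  # not flagged under equal costs
```

With equal costs the rule reduces to a simple majority vote; raising the cost of a false negative lowers the bar for flagging a case, which trades more false positives for fewer missed cases.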

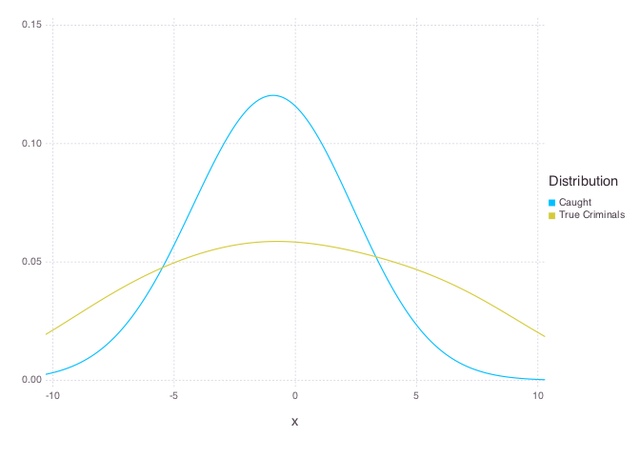

We show that machine-learning methods are far more effective than conventional risk assessments at identifying which victims of domestic violence are most at risk of further abuse. The random forest model based on the criminal history variables together with the DASH responses significantly outperforms the models based on DASH alone. The negative prediction error – that is, the share of cases predicted to involve no future violence in which violence nonetheless occurs – is 6.3%, compared with 11.5% for an officer’s DASH risk score alone. We also examine how much each feature contributes to model performance. No single feature clearly outranks all others in importance; rather, it is the combination of a wide variety of predictors, each contributing its own ‘insight’, that makes the model so powerful.
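
As a short illustration of how the negative prediction error is defined, the sketch below computes it from paired outcomes and predictions. The data are made up for the example and are not drawn from the study; the function name is hypothetical.

```python
def negative_prediction_error(actual, predicted) -> float:
    """Share of cases predicted 'no violence' in which violence occurred.

    `actual` and `predicted` are equal-length sequences of 0/1 values
    (1 = violence occurred / was predicted).
    """
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    # Keep the true outcomes only for cases the model predicted as negative.
    outcomes_when_negative = [a for a, p in zip(actual, predicted) if p == 0]
    if not outcomes_when_negative:
        raise ValueError("no cases were predicted negative")
    return sum(outcomes_when_negative) / len(outcomes_when_negative)


# Toy data: 8 cases, 6 of which are predicted 'no violence'.
actual = [1, 0, 0, 0, 1, 0, 0, 0]
predicted = [1, 0, 0, 0, 0, 1, 0, 0]
print(negative_prediction_error(actual, predicted))  # 1 missed case out of 6 predicted negatives
```

The measure conditions on the prediction rather than on the outcome, so it answers the question a practitioner faces: of the cases the tool says are safe, how many turn out not to be.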

Following this research, we have been in discussion with police forces across the United Kingdom and policy makers working on the Domestic Abuse Bill to consider how our findings could be incorporated into the response to domestic abuse. We hope this research serves as a building block for increasing the use of administrative datasets and empirical analysis to improve domestic violence prevention.

This post is based on the following article:

Grogger, J., Gupta, S., Ivandic, R. and Kirchmaier, T. (2021), Comparing Conventional and Machine-Learning Approaches to Risk Assessment in Domestic Abuse Cases. Journal of Empirical Legal Studies, 18: 90-130. https://doi.org/10.1111/jels.12276

Connect with the author

Ria Ivandić ’13 is a Researcher at LSE’s Centre for Economic Performance (CEP). She is an alum of the Barcelona GSE Master’s in Economics.